
Stop Digging, Start Asking: How RAG Transforms Unstructured Enterprise Data

I want you to picture a scenario. It’s 2:00 PM on a Tuesday.

You are looking for a specific clause in a vendor contract signed three years ago.

You know it exists. You remember the email chain. You remember the Slack message where your boss gave the thumbs up.

But you can’t find it.

You search "Vendor Contract." Nothing helpful. You search the vendor's name. You get 400 results.

You start opening PDFs one by one. You dig through archived emails. You waste forty-five minutes just trying to locate information you already own.

This is the "Digging Paradigm." And frankly, it is killing enterprise productivity.

We are drowning in data, but starving for insights.

But there is a shift happening right now. We are moving away from digging and moving toward asking.

It’s called RAG (Retrieval-Augmented Generation), and it is the single most important development for enterprise data management since the search bar.

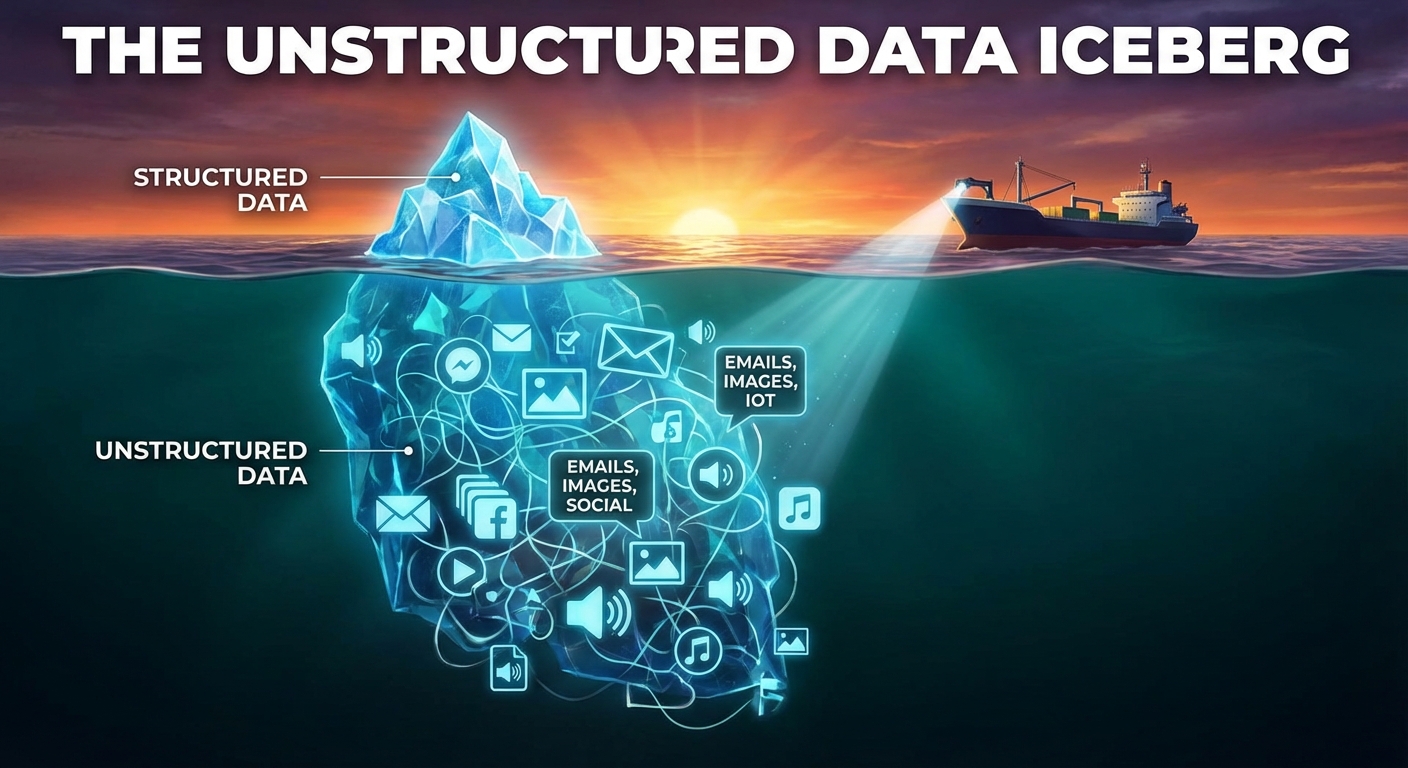

The Problem: The Unstructured Data Iceberg

Here is a statistic that keeps me up at night.

By a widely cited industry estimate, roughly 80% of enterprise data is "unstructured."

Structured data is easy. It lives in Excel sheets, SQL databases, and CRM fields. It fits in rows and columns. It is neat.

Unstructured data is messy.

What lies beneath the surface

Unstructured data includes PDFs, internal wikis, lengthy email threads, Slack conversations, video transcripts, and slide decks.

This is where the actual work happens. This is where the context lives.

But traditional search tools are terrible at reading it.

Standard enterprise search relies on keywords. If you don't type the exact keyword that appears in the document, you are out of luck.

It’s like trying to find a book in a library where all the covers have been ripped off.

The generic AI limitation

So, you might think, "Why not just use ChatGPT?"

Public Large Language Models (LLMs) are incredible, but they have two fatal flaws for business.

First, they don't know your secrets. They haven't read your internal memos or your proprietary code.

Second, they hallucinate. If they don't know the answer, they might make one up just to please you.

In a business context, a hallucination isn't a quirk. It is a liability.

This is where RAG bridges the gap.

Enter RAG: The "Open Book" Test

Let’s strip away the jargon for a moment.

To understand Retrieval-Augmented Generation (RAG), think back to your school days.

Using a standard LLM on its own (say, an off-the-shelf GPT-4) is like taking a test from memory.

The student is smart. They have read a lot of books. But if you ask them about a specific event that happened this morning, they won't know it. Their memory is frozen in time.

Giving the AI a cheat sheet

RAG changes the rules of the test.

RAG allows the student to take an "open book" test.

When you ask a question, the AI doesn't just rely on its training memory.

Instead, it pauses. It runs over to your company’s private filing cabinet. It pulls out the passages most relevant to your question.

Then, it reads them instantly.

Finally, it formulates an answer based only on those documents.

The result

The answer is grounded in your data, and it can cite the documents it came from.

It stays up to date: as soon as a document is indexed, even one you uploaded five minutes ago, it can feed into an answer.

And crucially, you stop digging. You didn't have to find the document. You just had to ask the question.

How It Works: Under the Hood

I promise not to get too technical here. But understanding the mechanism helps you see the value.

RAG isn't magic. It is a three-step workflow.

1. Indexing (The Vector Database)

First, we gather all that messy unstructured data: your PDFs, HTML files, and text docs.

We slice them into small chunks.
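To make "slicing" concrete, here is a minimal Python sketch that splits text into fixed-size, overlapping word windows. The function name `chunk_text` and the default sizes are illustrative assumptions; real pipelines often split on headings, paragraphs, or token counts instead.

```python
def chunk_text(text: str, chunk_size: int = 200, overlap: int = 40) -> list[str]:
    """Split text into chunks of at most `chunk_size` words.

    Neighboring chunks share `overlap` words, so text near a boundary
    appears in both chunks instead of being cut off in one of them.
    """
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this window already reached the end of the text
    return chunks


print(chunk_text("one two three four five six seven", chunk_size=4, overlap=1))
# ['one two three four', 'four five six seven']
```

The overlap costs a little extra storage but makes it less likely that the one sentence you need is split across two chunks.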

Then, we turn those chunks into "vectors."

A vector (often called an embedding) is just a long list of numbers that represents the meaning of the text, not just its keywords.

For example, a keyword search sees "Car" and "Automobile" as different words. A vector search places them close together because they mean nearly the same thing.
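"Close together" is usually measured with cosine similarity. The three-number vectors below are invented for illustration (real embeddings come from a model and have hundreds or thousands of dimensions), but they show how "car" and "automobile" can score as near-duplicates while an unrelated word scores low.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical, hand-picked vectors; a real embedding model would produce these.
car = [0.90, 0.10, 0.00]
automobile = [0.85, 0.15, 0.05]
banana = [0.00, 0.20, 0.95]

print(round(cosine_similarity(car, automobile), 3))  # close to 1.0
print(round(cosine_similarity(car, banana), 3))      # close to 0.0
```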

We store these vectors, along with the original text and where it came from, in a specialized vector database.
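Here is a toy version of the indexing step, in the same hedged spirit. `toy_embed` is a hypothetical stand-in for a real embedding model: it hashes words into buckets, so unlike a learned model it only matches shared words, not meaning. A plain Python list plays the role of the vector database.

```python
import math
import re
import zlib

DIM = 64  # hypothetical size; real embedding models use far more dimensions


def toy_embed(text: str) -> list[float]:
    """Hypothetical embedding: hash each word into a bucket, then L2-normalize.

    Placeholder only. It captures word overlap, not meaning.
    """
    vec = [0.0] * DIM
    for word in re.findall(r"[a-z0-9]+", text.lower()):
        vec[zlib.crc32(word.encode("utf-8")) % DIM] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


# In-memory stand-in for a vector database: one record per chunk.
index: list[dict] = []


def add_document(doc_id: str, chunks: list[str]) -> None:
    """Embed each chunk and store it with metadata used later for citations."""
    for i, chunk in enumerate(chunks):
        index.append({
            "doc_id": doc_id,
            "chunk_no": i,
            "text": chunk,
            "vector": toy_embed(chunk),
        })


add_document("handbook.pdf", [
    "Contractors may work remotely with manager approval.",
    "Employees accrue PTO monthly.",
])
print(len(index))  # 2
```

Keeping `doc_id` next to each vector is what later lets the system say which file an answer came from.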

2. Retrieval (The Hunt)

Now, you type a query: "What is our policy on remote work for contractors?"

The system turns your question into a vector.

It searches the database for the chunks whose vectors are closest to the question's vector (cosine similarity is a common way to measure this).

It retrieves the top 3 or 5 most relevant paragraphs from your company handbook.
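Retrieval then boils down to "score every stored chunk against the question vector and keep the best k." The sketch below does this with a brute-force scan over hypothetical records holding hand-made three-number vectors; production vector databases typically use approximate nearest-neighbor indexes so they don't have to scan everything.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norms if norms else 0.0


def retrieve(query_vec: list[float], index: list[dict], k: int = 3) -> list[tuple[float, dict]]:
    """Return the k records whose vectors are most similar to query_vec."""
    scored = [(cosine_similarity(query_vec, rec["vector"]), rec) for rec in index]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]


# Hypothetical records; in practice the vectors come from the embedding model.
index = [
    {"text": "Contractors may work remotely with manager approval.", "vector": [0.9, 0.1, 0.0]},
    {"text": "Employees accrue PTO monthly.", "vector": [0.1, 0.9, 0.1]},
    {"text": "VPN setup requires a hardware token.", "vector": [0.0, 0.2, 0.9]},
]
query_vec = [0.8, 0.2, 0.1]  # stands in for the embedded remote-work question

for score, rec in retrieve(query_vec, index, k=2):
    print(f"{score:.2f}  {rec['text']}")
```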

3. Generation (The Synthesis)

This is the cool part.

The system sends a prompt to the LLM that looks like this:

"User asked: What is the remote work policy? Here is the context found in the database: [Insert Retrieved Paragraphs]. Answer the user using ONLY this context."

The LLM acts as a summarizer and synthesizer.

It delivers a plain English answer, often with citations pointing to the source PDF.
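The generation step is mostly string handling around the model call. This sketch numbers each retrieved chunk and tags it with its source so the model can cite it, then attaches a de-duplicated source list to the reply on the application side. The function names, the `source`/`text` keys, and the prompt wording are assumptions, and the faked reply stands in for whichever LLM API you use.

```python
def build_prompt(question: str, chunks: list[dict]) -> str:
    """Build a grounded prompt; each chunk dict needs "source" and "text" keys."""
    context = "\n".join(
        f"[{i}] ({chunk['source']}) {chunk['text']}"
        for i, chunk in enumerate(chunks, start=1)
    )
    return (
        "Answer the user's question using ONLY the context below. "
        "Cite sources by their [number]. If the context does not contain "
        "the answer, reply \"I don't know.\"\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )


def format_answer(answer: str, chunks: list[dict]) -> str:
    """Append each source document once, in retrieval order, to the model's answer."""
    sources = list(dict.fromkeys(chunk["source"] for chunk in chunks))
    if not sources:
        return answer
    return answer + "\n\nSources: " + ", ".join(sources)


# Hypothetical retrieved chunks (the output of the retrieval step).
chunks = [
    {"source": "handbook.pdf", "text": "Contractors may work remotely with manager approval."},
    {"source": "hr-faq.html", "text": "Remote contractors must use the company VPN."},
    {"source": "handbook.pdf", "text": "Approvals are recorded in the HR portal."},
]
prompt = build_prompt("What is the remote work policy for contractors?", chunks)
print(prompt)

# `prompt` would be sent to your LLM; here the reply is faked.
reply = "Contractors may work remotely with manager approval [1]."
print(format_answer(reply, chunks))
```

Building the source list in code, rather than asking the model to produce it, means the citations always match what was actually retrieved.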

Why This Changes Everything

I believe this technology transforms the employee experience at a fundamental level.

It shifts us from being "searchers" to "knowers."

Here are three specific ways I see this playing out in the real world.

1. The death of the "Hallucination" fear

One of the biggest blockers to enterprise AI adoption is trust.

Executives are terrified that an AI will invent financial figures.

With RAG, you can force the AI to show its work.

If the system can't find the answer in the retrieved documents, it can be programmed to say, "I don't know," rather than making something up.
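Besides instructing the model in the prompt, one common way to enforce that fallback is a relevance threshold checked before the model is ever called. In this sketch the 0.5 cutoff is an arbitrary assumption you would tune on your own data, and `fake_llm` is a hypothetical placeholder for a real model call.

```python
from typing import Callable

FALLBACK = "I don't know. I couldn't find this in the documents available to you."


def answer_or_decline(
    question: str,
    scored_chunks: list[tuple[float, str]],
    generate: Callable[[str, list[str]], str],
    min_score: float = 0.5,
) -> str:
    """Call the LLM only if at least one chunk clears the relevance threshold."""
    relevant = [text for score, text in scored_chunks if score >= min_score]
    if not relevant:
        return FALLBACK
    return generate(question, relevant)


def fake_llm(question: str, context: list[str]) -> str:
    # Hypothetical stand-in for a real model call.
    return f"(answer generated from {len(context)} chunk(s))"


print(answer_or_decline("What was Q3 revenue?", [(0.12, "PTO accrues monthly.")], fake_llm))
```

Declining in code is cheap and predictable; the prompt instruction remains a second line of defense for chunks that pass the threshold but still lack the answer.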

This grounding does not eliminate errors entirely, but it makes AI far safer for business use.

2. Instant onboarding

Imagine a new hire on their first day.

Usually, they spend weeks tapping colleagues on the shoulder asking, "How do I request PTO?" or "How do I configure the VPN?"

With a RAG-enabled chatbot, they just ask the system.

The system pulls from the Confluence page, the HR PDF, and the IT Slack channel history.

The new hire gets an instant answer. The senior engineers don't get interrupted.

3. Customer support on steroids

Your support agents are likely drowning in documentation.

When a customer asks a complex technical question, the agent usually puts them on hold to dig through manuals.

With RAG, the agent types the question. The system retrieves the technical manual, the release notes, and the bug report.

It generates a suggested response in seconds.

The potential payoff is a dramatic cut in resolution times.

Getting Started: Don't Boil the Ocean

If you are thinking about implementing this, I have some advice.

Do not try to index everything at once.

RAG is powerful, but it is still subject to "Garbage In, Garbage Out."

Start with high-value data

Pick a specific domain. HR policies are a great place to start. Or perhaps technical documentation for a specific product.

Clean that data. Ensure the documents are current.

If you feed the system conflicting policies from 2019 and 2024, it may retrieve both and give a muddled or contradictory answer.
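A simple guard against that conflict is to index only the newest version of each document. The sketch assumes each file carries hypothetical `doc_key` and `effective` metadata; in practice that information might come from a document management system or a file naming convention.

```python
from datetime import date


def latest_versions(docs: list[dict]) -> list[dict]:
    """Keep only the most recent version of each document before indexing."""
    newest: dict[str, dict] = {}
    for doc in docs:
        key = doc["doc_key"]
        if key not in newest or doc["effective"] > newest[key]["effective"]:
            newest[key] = doc
    return list(newest.values())


docs = [
    {"doc_key": "remote-work-policy", "effective": date(2019, 3, 1), "path": "remote_2019.pdf"},
    {"doc_key": "remote-work-policy", "effective": date(2024, 1, 15), "path": "remote_2024.pdf"},
    {"doc_key": "pto-policy", "effective": date(2023, 6, 1), "path": "pto_2023.pdf"},
]
for doc in latest_versions(docs):
    print(doc["path"])
```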

Security is paramount

This is the big one.

If you index your CEO’s private emails and the payroll spreadsheets, and then give a chat interface to the interns, you have a problem.

Your RAG system must respect Access Control Lists (ACLs).

The retrieval step should only pull documents that the specific user is authorized to see.
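A minimal sketch of that rule, assuming each indexed chunk stores the groups allowed to read its source document (a hypothetical `allowed_groups` field copied from the source system's permissions). The filter runs before ranking, so unauthorized text never reaches the prompt.

```python
def authorized_chunks(index: list[dict], user_groups: set[str]) -> list[dict]:
    """Return only chunks the user may see: at least one group in common."""
    return [rec for rec in index if rec["allowed_groups"] & user_groups]


# Hypothetical index records with permissions attached at indexing time.
index = [
    {"text": "Remote work policy for all staff.", "allowed_groups": {"all-staff"}},
    {"text": "Executive payroll summary.", "allowed_groups": {"finance", "exec"}},
]
intern_groups = {"all-staff", "interns"}
visible = authorized_chunks(index, intern_groups)
print([rec["text"] for rec in visible])  # ['Remote work policy for all staff.']
```

Many vector databases offer metadata filters that can apply the same check inside the search query itself, which scales better than filtering in application code.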

Never skip this step.

The Future is Conversational

We are moving toward a world where the interface for software is simply natural language.

We won't navigate menus. We won't filter columns. We won't use advanced search syntax.

We will just talk.

"Show me the sales trends from last week compared to the Q3 projections."

"Summarize the risks outlined in these five legal contracts."

"Draft an email explaining the delay based on the engineering team's update."

RAG is the engine that makes this possible.

It turns your dusty, unstructured, forgotten data into a living, breathing knowledge base.

So, stop digging through folders. Stop reading 50-page PDFs to find one sentence.

Start asking.

Your data is ready to answer.