
Teaching AI to Speak Your Language: How RAG Turns Generic Chatbots into Business Experts

We’ve all had that moment of amazement.

You type a prompt into ChatGPT or Claude, and it spits back a Shakespearean sonnet about a toaster.

It’s impressive. It feels like magic.

But then, you try to use that same magic for your business.

You ask the AI, "What is our refund policy for the Q3 customized widgets?"

The AI pauses.

Then, it either politely tells you it doesn't know, or worse—it hallucinates.

It confidently invents a refund policy that doesn't exist, promising your customer a 100% cash-back guarantee that definitely isn't in your handbook.

Here at My Core Pick, we see this friction every day.

Business owners want the power of AI, but they need it to know their specific data, not just general internet trivia.

This is where RAG comes in.

RAG stands for Retrieval-Augmented Generation.

It sounds like a mouthful of tech jargon, but the concept is actually incredibly simple.

It is the bridge that turns a generic, know-it-all chatbot into a specialized expert on your specific business.

Today, I’m going to break down exactly how it works, why it’s better than "training" a model, and how you can use it to give your AI a brain upgrade.

The "Brilliant Intern" Problem

To understand RAG, you first have to understand the limitation of standard Large Language Models (LLMs).

Think of an LLM like a brilliant, freshly graduated intern.

They have read every book in the public library.

They know history, coding, French poetry, and how to write a marketing email.

But on their first day at your office, they are useless at specific tasks.

They don’t know your internal wikis.

They haven't read your customer support logs.

They don’t know that "Project Alpha" was cancelled last Tuesday.

If you ask this intern a specific question about your company, they have two choices.

They can say "I don't know."

Or, because they want to impress you, they might guess.

In the AI world, that guess is called a "hallucination," and in business, it’s dangerous.

You cannot have an automated system making up facts about pricing or compliance.

So, how do we fix this?

Many people assume the answer is "fine-tuning," or retraining the brain of the AI.

But that is expensive, slow, and requires massive amounts of data.

It’s like sending that intern back to college for four years just to learn your employee handbook.

There is a much smarter way.

You just hand the intern the handbook and say, "Read this before you answer."

That is exactly what RAG does.

Enter RAG: The Open-Book Exam

Let’s change the metaphor slightly.

Standard AI is like a student taking a test from memory.

If they memorize the wrong date, they get the answer wrong.

RAG turns that test into an open-book exam.

When you ask a question, the AI doesn't just look inside its own brain.

First, it runs to your company's library (your data).

It finds the specific page that discusses your question.

Then, it comes back to you and says:

"Based on the documents you gave me, here is the answer."

This changes everything.

It means the AI isn't relying on training data from two years ago.

It is relying on the PDF you uploaded five minutes ago.

It anchors the AI in reality—your reality.

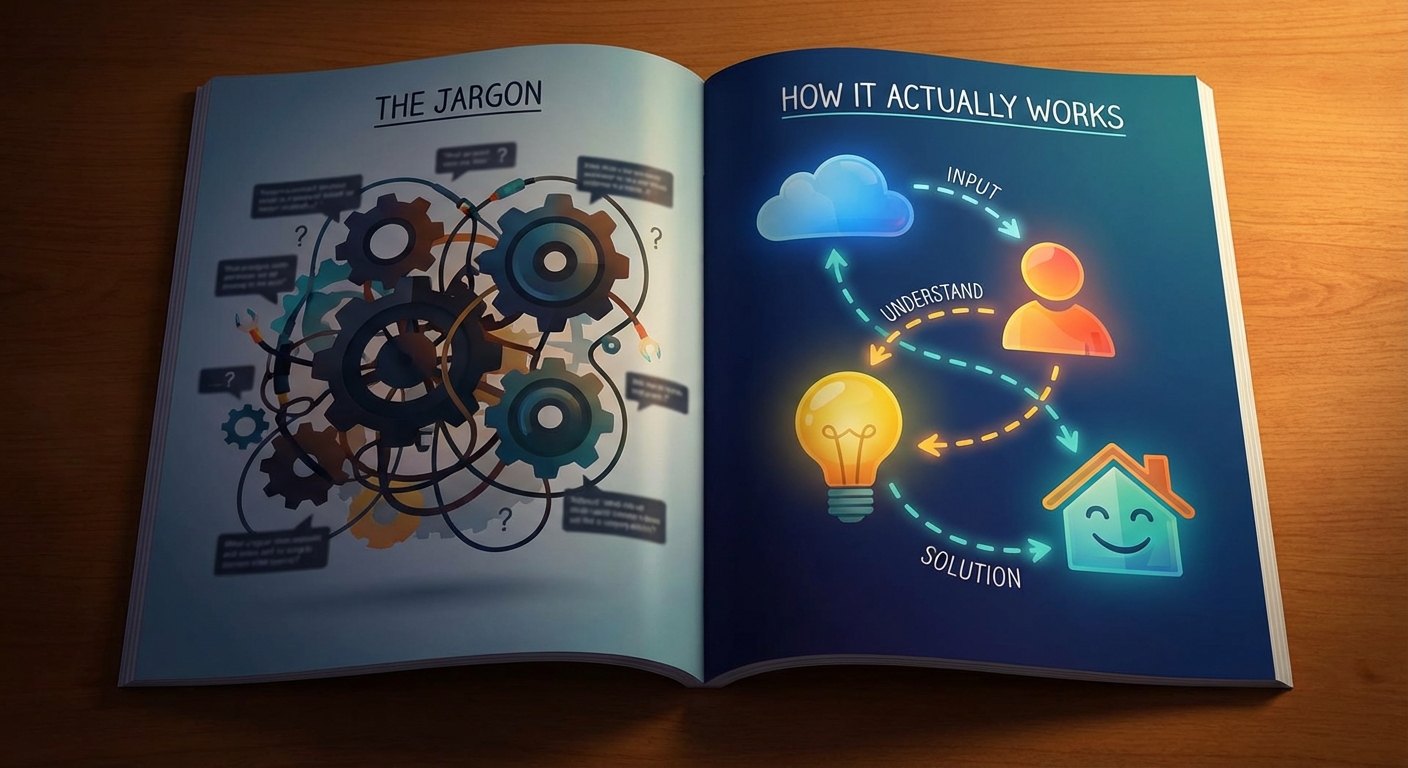

How It Actually Works (Without the Jargon)

I promised to keep this non-technical, but it helps to know the workflow.

When we implement RAG solutions, we generally follow a three-step process.

Once your documents are indexed, answering a question usually takes a second or two, but here is what’s going on under the hood.

1. The Indexing Phase (The Librarian)

Before the AI can answer, we have to organize your data.

We take your PDFs, Word docs, Notion pages, and Slack history.

We chop them up into small chunks of text.

Then, we turn those chunks into "vectors."

A vector is just a long list of numbers that represents the meaning of the text.

We store these numbers in a Vector Database.

Think of this like a super-organized library card catalog that understands concepts, not just keywords.
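
If you like to see things in code, here is a minimal sketch of the indexing step. It is a toy, not a production setup: the `embed` function just counts words (a real system would call an embedding model), the "vector database" is a plain Python list, and the manual text, function names, and chunk size are all made up for illustration.

```python
import re
from collections import Counter

def chunk_text(text, max_words=15):
    """Chop a document into chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]

def embed(text):
    """Toy embedding: word counts. Assumption: a real system calls an embedding model here."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

# Made-up sample text standing in for one of your documents.
manual = (
    "To reset the pilot light on the Model X heater, turn the gas knob to OFF "
    "and wait five minutes. Then turn the knob to PILOT and hold the igniter. "
    "Refunds for customized widgets are handled by the support team."
)

# The "vector database" is just a list of (chunk, vector) pairs held in memory.
index = [(chunk, embed(chunk)) for chunk in chunk_text(manual)]

for chunk, vector in index:
    print(len(vector), "distinct words ->", chunk)
```

Real pipelines usually chunk on sentence or section boundaries and let chunks overlap a little, so a single instruction isn't split in half.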

2. The Retrieval (The Search)

Now, a user asks a question: "How do I reset the pilot light on the Model X heater?"

The system doesn't send this to ChatGPT yet.

First, it converts your question into a vector, using the same method it used on your documents.

It searches your Vector Database for text chunks that are mathematically similar to your question.

It pulls back the closest matches, such as the paragraph in your technical manual about the Model X pilot light.
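
Here is a rough sketch of what "mathematically similar" can mean. It scores each chunk against the question with cosine similarity, a common choice for comparing vectors. The word-count `embed` function and the three sample chunks are assumptions for illustration; a real system would use an embedding model and a vector database.

```python
import math
import re
from collections import Counter

def embed(text):
    """Toy embedding: word counts (stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine_similarity(a, b):
    """Cosine of the angle between two sparse vectors; closer to 1.0 means more similar."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

# Made-up chunks standing in for your indexed documents.
chunks = [
    "Model X heater: to reset the pilot light, turn the gas knob to OFF first.",
    "Model X heater: clean the air filter every three months.",
    "Refunds for customized widgets are reviewed by the support team.",
]
index = [(chunk, embed(chunk)) for chunk in chunks]

def retrieve(question, index, top_k=1):
    """Return the top_k chunks whose vectors are closest to the question's vector."""
    q = embed(question)
    ranked = sorted(index, key=lambda item: cosine_similarity(q, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:top_k]]

question = "How do I reset the pilot light on the Model X heater?"
print(retrieve(question, index))
```

With this sample data, the pilot light chunk ranks first because it shares the most words with the question. Raising `top_k` hands the model more context at the cost of a longer prompt.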

3. The Generation (The Answer)

This is the magic moment.

The system takes your user's question.

It also takes the paragraph it found in the manual.

It pastes them both into a prompt for the AI.

It essentially tells the AI: "Using ONLY this paragraph from the manual, answer the user's question about the pilot light."

The AI reads the text and generates a fluent, human-like answer.

But the facts are drawn from your data, not from the model’s memory.
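
The "pasting" step is plain string assembly. Below is a minimal sketch, assuming a hypothetical `build_prompt` helper and a made-up manual excerpt; the exact wording of the instructions is something you would tune for your own model.

```python
def build_prompt(question, retrieved_chunks):
    """Combine the retrieved text and the user's question into one prompt."""
    context = "\n\n".join(
        f"[{i}] {chunk}" for i, chunk in enumerate(retrieved_chunks, start=1)
    )
    return (
        "Answer the question using ONLY the context below. "
        "If the context does not contain the answer, say "
        "\"I don't have that information.\"\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )

prompt = build_prompt(
    "How do I reset the pilot light on the Model X heater?",
    ["Model X heater: to reset the pilot light, turn the gas knob to OFF first."],
)
print(prompt)
# The finished string is what gets sent to whichever chat model you use.
```

Numbering the chunks gives the model something to point at when it cites where an answer came from.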

Why Your Business Needs This Yesterday

You might be thinking, "Can't I just use keyword search?"

You could, but keyword search is dumb.

If a user searches for "broken screen," but your manual calls it a "cracked display," keyword search fails.

RAG understands that "broken" and "cracked" mean the same thing in this context.
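
To make the difference concrete, here is a deliberately tiny sketch. The `CONCEPTS` table is hand-written and purely hypothetical: it stands in for what an embedding model learns on its own from large amounts of text, namely that different words can point at the same idea.

```python
# Hand-written stand-in for an embedding model: words with similar
# meanings map to the same concept. A real model learns this from data.
CONCEPTS = {
    "broken": "damaged", "cracked": "damaged", "shattered": "damaged",
    "screen": "display", "display": "display", "monitor": "display",
}

def keyword_match(query, doc):
    """True only if every query word literally appears in the document."""
    doc_words = set(doc.lower().split())
    return all(word in doc_words for word in query.lower().split())

def to_concepts(text):
    """Map each word to its concept, leaving unknown words unchanged."""
    return {CONCEPTS.get(word, word) for word in text.lower().split()}

def concept_match(query, doc):
    """True if every concept in the query also appears in the document."""
    return to_concepts(query) <= to_concepts(doc)

doc = "how to replace a cracked display"
query = "broken screen"

print(keyword_match(query, doc))  # False: neither word appears in the document
print(concept_match(query, doc))  # True: both map to concepts the document contains
```

Real embeddings give a similarity score rather than a yes-or-no answer, but the idea is the same: matching happens on meaning, not spelling.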

Here is why we at My Core Pick believe RAG is the future of business automation.

Accuracy and Trust

This is the biggest factor.

With RAG, you can force the AI to cite its sources.

The chatbot can say, "I found this answer in the 'Q3 Policy Update', page 4."

If the AI can't find the answer in your documents, it can be programmed to say, "I don't have that information."
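
Both behaviors come down to a few lines of logic around the retrieval step. The sketch below assumes a toy relevance score (the share of question words found in a document) and a made-up threshold of 0.5; the documents, the refund wording, and the function names are illustrative only.

```python
import re

def words(text):
    """Lowercased set of words in the text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def relevance(question, text):
    """Toy score: share of the question's words that appear in the text."""
    q = words(question)
    return len(q & words(text)) / len(q) if q else 0.0

# Made-up documents, each stored with the source it came from.
documents = [
    {"source": "Q3 Policy Update, page 4",
     "text": "Customized widgets can be refunded within 14 days of delivery."},
    {"source": "Brand Guidelines",
     "text": "The brand color palette uses two shades of blue."},
]

def answer(question, documents, min_score=0.5):
    """Return the best-matching text with its source, or a refusal if nothing is close."""
    best = max(documents, key=lambda d: relevance(question, d["text"]))
    if relevance(question, best["text"]) < min_score:
        return "I don't have that information."
    # A real system would hand best["text"] to the model; here we quote it directly.
    return f'{best["text"]} (Source: {best["source"]})'

print(answer("Can customized widgets be refunded?", documents))
print(answer("Do you ship to Canada?", documents))
```

The first question matches the policy document and comes back with its source attached. The second matches nothing, so the bot declines instead of guessing.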

It makes lying far less likely, and far easier to catch.

Data Privacy and Security

This is a major concern for our enterprise clients.

You do not want to upload your proprietary trade secrets into a public model like ChatGPT to "train" it.

With RAG, your data stays in your database.

You only send small snippets of text to the AI model at the moment of the query.

The underlying model doesn't "learn" your data; it just processes it temporarily.

Real-Time Updates

Imagine you change your prices today.

If you "fine-tuned" a model, you would have to pay thousands of dollars to re-train it to learn the new prices.

With RAG, you simply update the document in your database.

The very next time someone asks a question, the AI retrieves the new document.

It is instant.
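
Here is a small sketch of why the update takes effect right away. The in-memory `store`, the `upsert` helper, and the prices are all hypothetical; the point is that retrieval reads whatever is in the database at the moment of the question, so no retraining is involved.

```python
import re

def embed(text):
    """Toy embedding: the set of words in the text (stand-in for a real model)."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

store = {}

def upsert(doc_id, text):
    """Insert or overwrite a document and recompute its vector."""
    store[doc_id] = {"text": text, "vector": embed(text)}

def retrieve(question):
    """Return the stored text that shares the most words with the question."""
    q = embed(question)
    best = max(store.values(), key=lambda doc: len(q & doc["vector"]))
    return best["text"]

# Made-up sample documents.
upsert("pricing", "The Standard plan costs $20 per month.")
upsert("hours", "Support is available Monday to Friday.")

question = "How much does the Standard plan cost?"
before = retrieve(question)

# Prices change today: overwrite the one document that mentions them.
upsert("pricing", "The Standard plan costs $25 per month.")
after = retrieve(question)

print(before)
print(after)
```

The only work on update is re-embedding the changed document, which is a far smaller job than retraining a model.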

Real-World Magic: RAG in Action

So, what does this look like in the wild?

Here are three scenarios where we are seeing RAG revolutionize workflows.

The "Super" Customer Support Agent

Imagine a support bot that actually helps.

A customer asks, "Does this software integrate with Salesforce?"

The bot retrieves the API documentation and the integration guide.

It answers, "Yes, we support Salesforce via our REST API. Here is the link to the setup guide."

It deflects tickets from human agents, saving you massive amounts of time.

The Internal Knowledge Base

We all have that one employee who knows where every file is saved.

When they go on vacation, the office grinds to a halt.

RAG creates a "Corporate Brain."

New hires can ask, "How do I submit an expense report?" or "What is the brand color code?"

The AI pulls from the HR Notion page and the Brand Guidelines PDF instantly.

Onboarding becomes a breeze.

Legal and Compliance Assistant

Lawyers have to read thousands of pages of contracts.

With RAG, a legal team can upload 500 contracts and ask:

"Which of these contracts have a termination clause of less than 30 days?"

The AI retrieves the specific clauses from the relevant documents and lists them out.

It turns days of reading into seconds of processing.

Getting Started: It’s Not Rocket Science

The best part about RAG is that the barrier to entry is dropping fast.

A year ago, you needed a team of Python engineers to build this.

Today, the ecosystem is thriving.

Tools like LangChain and LlamaIndex provide the framework.

Vector databases like Pinecone or Weaviate handle the storage.

There are even "no-code" platforms emerging that let you drag and drop your PDFs to create a RAG chatbot in minutes.

You don't need to be a tech giant to afford this.

You just need organized data and a willingness to experiment.

Conclusion

We are moving past the "hype" phase of AI and into the "utility" phase.

Generating poems is fun.

But generating accurate answers from your own business data is profitable.

RAG is the technology that makes AI safe for work.

It respects your data privacy.

It reins in the hallucinations.

And it allows you to scale your expertise instantly.

If you are looking to integrate AI into your business, stop trying to train the model.

Just teach it how to read your library.

Your customers (and your bottom line) will thank you.